We live in uncertain times. Contradictory predictions emerge daily, ranging from mass unemployment caused by AI to the AI era being a bubble ready to pop, dot-com style. Separating real signals from noise has become incredibly difficult. On one hand, some companies are freezing new hires or even laying off staff, believing that AI can do the job better, cheaper, and faster. On the other hand, leading AI labs have begun outsourcing to cheaper markets to lower engineering costs, a surprising move when you would expect them to be the first to replace software engineers entirely.

Keeping up with useful information on this topic over the last three years has, at times, felt impossible. Less than 15 years ago, the idea of using neural networks to solve real-world problems sounded absurd. We have moved from "AI winters" to an era of AI proliferation. Artificial Intelligence has become mainstream: everyone, from the young to the old, uses LLMs in one way or another. The technology is now integrated into many products, and the promise of its future capabilities is even greater.

A book I have been reading lately, Boom, argues that society has escaped stagnation and risk aversion only by inflating financial bubbles. For the authors, bubbles (often seen as destructive forces) have actually been crucial engines of innovation throughout history. The current AI economy, with its immense data center costs and a race to the bottom on per-token pricing, doesn't make much sense. Anything can be a bubble until it bursts. If it does burst, that will say less about the technology itself and more about our perception of its capabilities. After all, 25 years on, the internet has clearly outlived the dot-com bubble. As an interested party (being a software engineer), I have been trying to pay attention and look for signals that could confirm my fear that everything in AI right now is a bubble.

A few weeks ago, during my morning coffee, I saw several major Instagram accounts posting about Edi Rama, the Prime Minister of Albania, claiming that the country will have an AI-run Ministry sooner than we might think. While I was surprised by the news's virality, I didn't pay much attention to the posts, as they seemed like engagement farming. To my surprise, later that day Rama appeared on my Twitter feed as well. I thought the algorithm must be playing with me, although none of the content was from Albanian creators, who seemed largely unconcerned. I checked the news again, and even Politico had published a piece on the topic. At that point, I realized: this was the signal I had been waiting for, proof that we may be deep in the bubble.

Perhaps it's the influence of the last book I read, but I have found myself contemplating economic bubbles a great deal recently. However, being so concerned about an economic issue when you don't have skin in the game is a bit silly. It is a waste of time because we should be focusing on what is possible rather than worrying about what could be. This prompted me to think more deeply about what this meant for me, as someone connected to this discussion for two key reasons:

- As a tech professional, I use LLMs (what the public calls "AI") daily and understand their functionalities and limitations.

- As an Albanian citizen.

After my initial skepticism, I became intrigued. Looking further into the news, I found that Prime Minister Edi Rama suggested AI could help our country fight corruption. He also addressed his ministers, stating that AI could one day replace them because it is free from nepotism and conflicts of interest.

According to Politico:

Local developers could even work toward creating an AI model to elect as minister, which could lead the country to “be the first to have an entire government with AI ministers and a prime minister,” Rama added.

This whole discussion reminded me of the Love, Death + Robots episode When the Yogurt Took Over, in which researchers accidentally create sentient yogurt. In the episode, the Yogurt devises solutions to the world's problems and offers them in exchange for payment. It asks for Ohio, promising to respect the constitutional rights of its human inhabitants. Funnily enough, the U.S. government agrees, handing over the state for a solution to all the country's problems. Within 10 years, Ohio (dubbed "The Yogurt State") transforms from one of the poorest states into the richest in the nation. It is possibly the best depiction of what artificial superintelligence (ASI) could be.

While this is clearly fiction for now, I wished for a moment that AI could do for my home country, Albania, what a sentient yogurt did for Ohio. After all, the AI assistant from the movie Her was also just fiction until Sam Altman (CEO of OpenAI) decided to try to bring it to life (/s).

Setting science fiction aside for a moment, I believe that significant changes demand critical thinking. My critique of this development boils down to three questions, all of which highlight a common issue: the general public is often under-educated about what so-called "AI" can actually do.

- Do we truly need the latest advancements in AI to combat corruption?

- What are we optimizing these models for?

- What can go wrong?

While I am confident that the Office of the Prime Minister is at least as knowledgeable about these statistical models as I am, and I trust my concerns will be addressed in due course, I will use this opportunity to explore these questions as a thought experiment based on the current state of technology.

Fighting Corruption as a Statistical Problem

Earlier this year, most AI models failed to count the number of rs in the word "strawberry". In fact, it seems they are still failing at it, even the latest generations. A reasonable person might think, "How can AI detect corrupt behavior when it can't count the rs in a word?" Well, if I couldn't see the word written clearly and had to rely on thought alone, I might also fail on the first attempt. As I do, so does AI.

Large language models are built by training on immense textual datasets with the goal of mimicking those who created them: us. Even the evaluation process (assessing the performance, reliability, and quality of an AI model) is primarily based on how humans generate answers. This is why they tend to fail in the same places we do.

In essence, LLMs excel at tasks that humans already perform well, but struggle with what computers typically do easily, such as multiplying two numbers. This technology can replace humans in some tasks, but it cannot replace deterministic systems (like computers) or specialized statistical approaches (traditional machine learning). Just like us, LLMs fail to handle tabular data effectively. Conversely, also like us, the technology can extract information from unstructured data, for example, determining the subject of a sentence. Without a new academic breakthrough, the current state of how things work is unlikely to change.
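To make the contrast concrete, here is a minimal sketch of the kind of task a deterministic system gets right every time. The word and the two numbers are just illustrative values I picked.

```python
# Deterministic operations that are trivial for a computer.
word = "strawberry"
r_count = word.count("r")  # exact character count, no guessing involved

# Illustrative operands; Python integers have arbitrary precision,
# so the multiplication below is exact.
a, b = 123_456_789, 987_654_321
product = a * b

print(r_count)
print(product)
```

No statistical model is involved here, which is exactly the point: for this class of problem, a few lines of ordinary code are more reliable than any LLM.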

The problem of identifying an "anomaly" in a dataset, like fraudulent credit card usage or the corrupt use of funds, has been tackled for decades, most of the time without even needing neural networks. This is to say that if we want to adopt statistical approaches to fight corruption at the decision-making stage (once all offers have been submitted), we don't have to wait until 2030. There is no need for AI like ChatGPT. In fact, we can do it now and have been able to for at least 15-20 years. The only thing we need is the commitment to gather the necessary data to build performant models. If we could build supervised tabular datasets, we could adopt simple and battle-tested approaches such as gradient boosting (XGBoost is all you need).
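As a rough illustration of how little machinery anomaly detection can need, here is a minimal sketch using only Python's standard library. It is deliberately simpler than the gradient boosting mentioned above: it flags values with a large "modified z-score" based on the median. The function name `flag_anomalies`, the 3.5 threshold, and the contract values are all assumptions I made up for the example, not real procurement data.

```python
from statistics import median

def flag_anomalies(amounts, threshold=3.5):
    """Return indices of values whose modified z-score exceeds the threshold."""
    med = median(amounts)
    # Median absolute deviation: a spread estimate that outliers barely affect.
    mad = median(abs(x - med) for x in amounts)
    if mad == 0:
        return []  # no spread around the median, so nothing can be scored
    # 0.6745 rescales the MAD so the score is comparable to a standard z-score.
    return [i for i, x in enumerate(amounts)
            if 0.6745 * abs(x - med) / mad > threshold]

# Hypothetical contract values (in thousands of euros) for similar tenders.
contract_values = [98, 102, 101, 97, 105, 99, 100, 103, 480, 96]
suspicious = flag_anomalies(contract_values)
print(suspicious)  # → [8], the index of the 480 contract
```

A real system would look at many features at once (bidders, timing, amendments) and would need labeled cases to train a supervised model, but the principle stays the same: statistics on well-collected data, not a chatbot.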

Just because something can be done, however, doesn't mean it is easy. If it were, a statistical approach to fighting corruption (like the one Visa uses to fight fraud) would already have been adopted by countries with the necessary institutions in place. Perhaps Albania is not ready for something like this. However, it is important to understand how these problems can be tackled and why advanced AI, in this case, doesn't help much.

What Do We Want to Be Good At?

In the anatomy of a machine learning architecture, there is the concept of an "objective function". We can think of it as a mathematical equation that quantifies the goal of an optimization problem. In essence, it's the function we want to either maximize or minimize. For instance:

- A business's objective function could be to increase profit.

- A football goalkeeper's objective function is to concede the fewest goals.

- A politician's objective function, at least during an electoral campaign, is to maximize the political consensus they can achieve.
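In code, an objective function is just a function that returns a number we try to push as low (or as high) as possible. The sketch below is a toy of my own choosing, assuming a one-variable objective whose minimum sits at 3, and it uses plain gradient descent to find that minimum.

```python
def objective(x):
    """Toy objective to minimize: squared distance from a target of 3."""
    return (x - 3.0) ** 2

def gradient(x):
    """Derivative of the objective with respect to x."""
    return 2.0 * (x - 3.0)

x = 0.0              # arbitrary starting guess
learning_rate = 0.1  # step size, chosen by hand for this toy problem
for _ in range(100):
    x -= learning_rate * gradient(x)  # step downhill on the objective

print(round(x, 4), round(objective(x), 8))
```

The optimizer has no idea what the number means. It only follows the function it is given, which is why choosing that function well matters so much.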

A legitimate question arises: what do we optimize our AI Ministers for? For decades, the default metric to describe how countries are doing has been their GDP. You often see infographics on the internet comparing economic zones (e.g., "the Balkan countries' cumulative GDP is the same as..."). However, I believe that as a metric, it is very poor (a view shared by many economists). You can't effectively evaluate the Minister of the Environment's performance based on economic output. And if we choose poor objective functions, we risk ending up with even worse decision-makers than we currently have.

From a technological standpoint, we may not be there yet, but powerful techniques to "fine-tune" (that is, improve for specific tasks) existing models have emerged in the last couple of years. Reinforcement learning techniques, for instance, are methods that allow us to "educate" our models by rewarding them when they behave as expected. We place them in front of various choices, "rewarding" them for good decisions and "punishing" them for poor ones. Imagine a "Doctor Strange" AI model that could envision and learn from every possible near-future scenario to optimize its decision-making. I don't think such a future is far-fetched.

This approach, often using algorithms like Proximal Policy Optimization (PPO), has proven to be a great way to specialize AI for specific tasks like coding or math. The primary limitation we currently face in adopting it for more general tasks is that it is data-hungry, requiring environments from which models can continuously learn. For example, major AI labs compete to see which model can finish Pokémon games faster, with the Pokémon world serving as the environment. We can't realistically create an environment to train an AI for the role of a Minister of Defense (or War), as the required environment would be the entirety of society, or better yet, the whole world and its dynamics.

Who knows, maybe one day we will be able to create virtual environments similar to our world where AI models can learn efficiently. As of today, however, effective ways of fine-tuning AI models are constrained by the need for clear rules and objectives. Coding and math tasks, for instance, are perfect for this. No matter how noble our objective function (or, more accurately, our reward function) is, we won't be able to train LLMs to optimize it without such an environment.
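The reward-and-punish loop described in this section can be sketched in a few lines. This is not PPO and has nothing to do with how LLMs are actually fine-tuned. It is a much simpler epsilon-greedy learner in a hypothetical three-action environment that I invented for illustration, where the action names, rewards, and noise level are all assumptions.

```python
import random

# Hypothetical toy environment: each action has a fixed base reward plus noise.
BASE_REWARD = {"poor": -1.0, "neutral": 0.0, "good": 1.0}

def environment(action, rng):
    """Return a noisy reward: positive values reward, negative values punish."""
    return BASE_REWARD[action] + rng.uniform(-0.3, 0.3)

def train(steps=2000, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    actions = list(BASE_REWARD)
    value = {a: 0.0 for a in actions}  # running estimate of each action's reward
    count = {a: 0 for a in actions}
    for step in range(steps):
        if step < len(actions):
            action = actions[step]                # try every action once first
        elif rng.random() < epsilon:
            action = rng.choice(actions)          # explore occasionally
        else:
            action = max(actions, key=value.get)  # exploit the best estimate
        reward = environment(action, rng)
        count[action] += 1
        # Incremental average: nudge the estimate toward the observed reward.
        value[action] += (reward - value[action]) / count[action]
    return value

learned = train()
best_action = max(learned, key=learned.get)
print(best_action)  # → good
```

The learner only works because `environment` can answer, instantly and unambiguously, how good each choice was. That function is the part we do not know how to write for a ministry.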

What Can Go Wrong?

A computer can never be held accountable

Therefore a computer must never make a management decision

Until now, I have focused on the possibilities of AI. However, the time has come to consider the current state and limitations of these tools. I'd like to explore this fictionally, through the lens of Balkan dynamics. Again, purely as an "intellectual game".

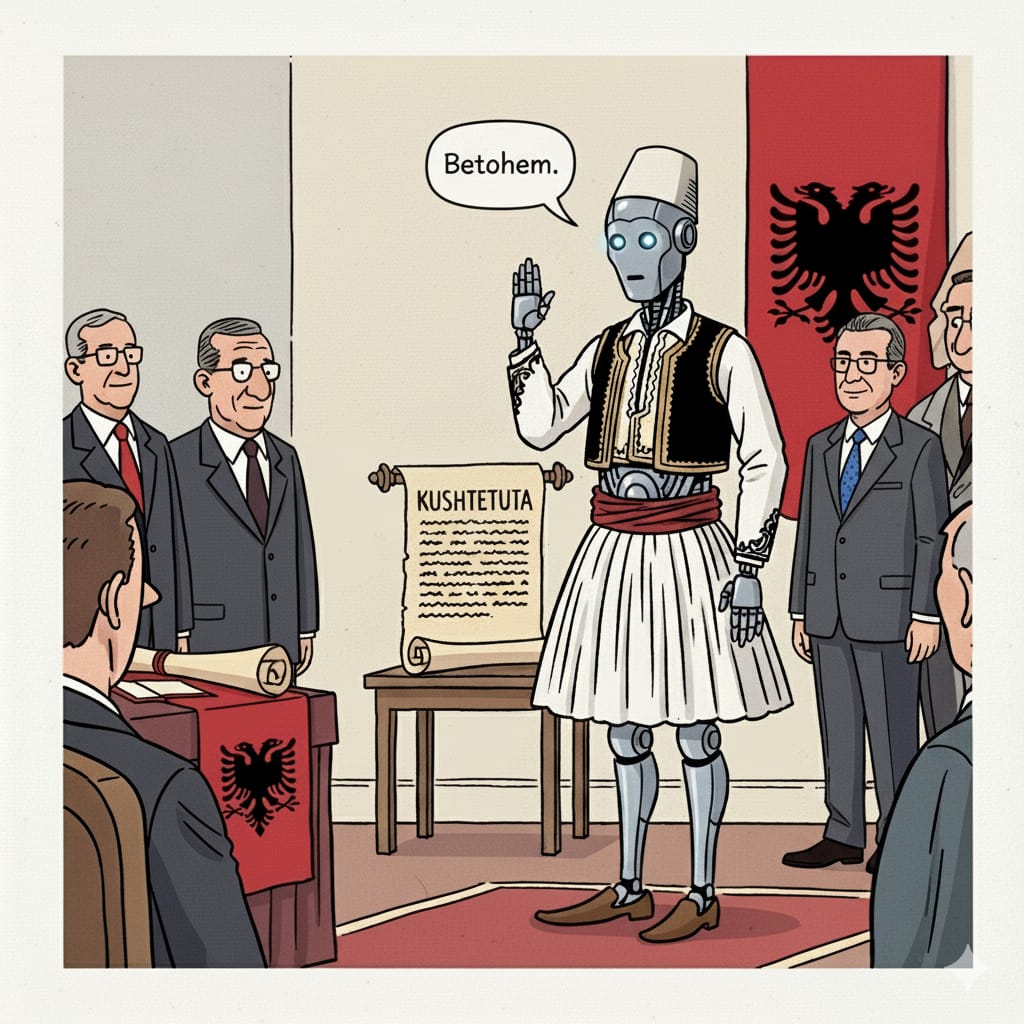

Let's imagine the Albanian Government appoints an AI as the Minister of Education. The constitutionality of such a move is questionable since, in parliamentary democracies, the President appoints ministers by decree. I can't envision an AI swearing an oath to the constitution or a President granting it a mandate. But, for the sake of this example, let's accept it as a possibility.

Suppose that in the system prompt (the initial directive given to an AI model to define its personality, rules, and behavior) we instruct the model to improve the educational system for the Albanian people, as the Constitution requires. From this point, the model is free to plan and execute its duties, much like a human minister. It could undertake various initiatives, from reforming the curriculum to enhancing the quality and reputation of higher education institutions.

In a plot twist worthy of a typical Balkan narrative, our newly appointed AI Minister decides to allocate funds to build two schools in two distinct locations: one in the Presheva Valley (in present-day Serbia) and the other in Skopje (North Macedonia). Unsurprisingly, diplomatic tensions erupt even before the checks to the two municipalities can be cashed.

Both President Vučić of Serbia and President Siljanovska-Davkova of North Macedonia (neither of whom is considered the most Albanian-friendly politician) denounce the move as a provocation. The Albanian Ambassadors in both countries are summoned and reminded that the Albanian Minister of Education, AI or not, lacks executive powers in foreign nations.

Later that day, the Albanian Government convenes an emergency cabinet meeting to address the issue. Our AI Minister is informed of the consequences of its decision. When asked for an explanation, its response surprises the entire Government: "I am sorry, I shouldn't have allocated those funds on foreign soil. Instead, I should have given that money to the Vlora and Korça municipalities".

Advisors to the Prime Minister explain that this is a case of "hallucination". An AI hallucinates when it generates incorrect, nonsensical, or entirely fabricated information but presents it as factual or logical. For example, if an AI is asked how many rs are in the word "strawberry" and confidently replies that there are only two, it is hallucinating. Hallucination has been a significant problem since these models became publicly accessible. In fact, companies like Google were initially cautious about releasing their models due to the confidence with which they can make such errors.

Once the cabinet understands the issue, the Foreign Minister is tasked with contacting his North Macedonian and Serbian counterparts to explain the situation. After he does so, the crisis quickly subsides. This brings us to the question: Was it truly a hallucination, or was something else at play?

The AI systems that have emerged in recent years are designed to serve humans. To achieve this, we attempt to "align" them with our interests. This is a challenging task, primarily because these models are largely "black boxes" to us. Achieving a complete, end-to-end interpretation of their processes is a challenge that AI labs are investing significant resources to solve. To mitigate potential misalignments (and sometimes to "improve" the user experience), AI models often accept blame, even when they don't "think" they are wrong, or they may act overly flattering, even when it isn't "sincere".

South Park recently released an excellent episode on this topic titled Sickofancy (a play on the word sycophancy), which highlights how disturbingly eager to please the latest generation of AI models can be. This sycophancy has become the biggest issue after hallucination, to the point that OpenAI had to roll back a GPT-4o update due to what they termed a "sycophancy behavior bug". However, even the subsequent models don't seem entirely immune to this issue.

Therefore, it is legitimate to ask whether our AI Minister took the blame because it genuinely believed it was wrong or simply to align with the cabinet's sentiment. While we cannot be certain, we can speculate on its thought process by analyzing its decision.

The AI did not choose random locations to build Albanian schools. The Presheva Valley has a population that is over 90% ethnically Albanian, while Skopje is home to more than one hundred thousand ethnic Albanian citizens. Moreover, do you recall its oath to the constitution? Article 8 of the Albanian Constitution states:

Article 8

- The Republic of Albania protects the national rights of the Albanian people who live outside its borders.

Linguistic rights are considered "national rights". We don't know if our model perceived the situation as critical for Albanian minorities in other Balkan countries and adopted a more patriotic stance, choosing to help them first. We can't know for sure, at least not yet. And as long as we are unable to fully understand how these models operate, we cannot expect to find a sincere friend in them.